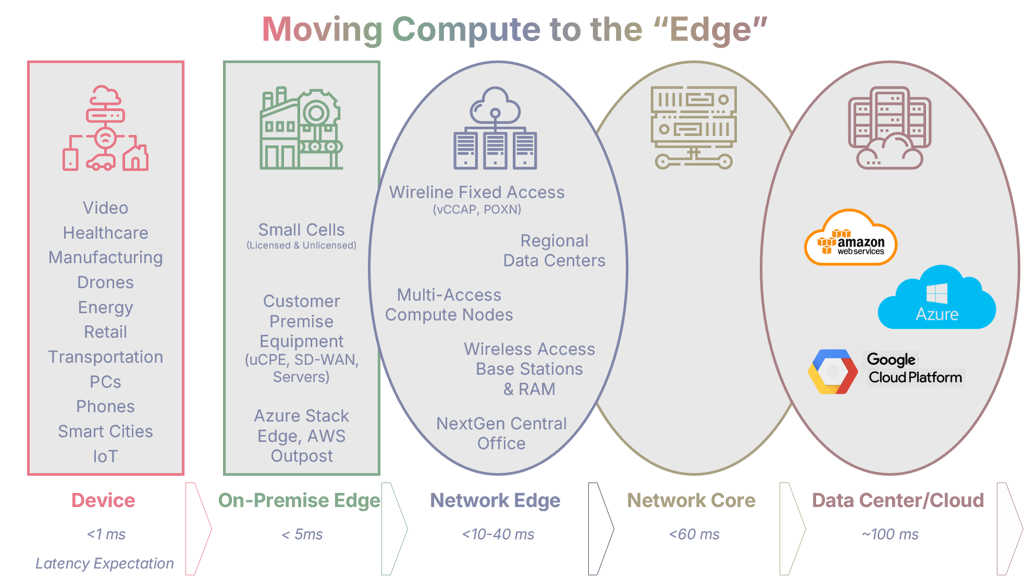

Edge computing refers to processing and analyzing data at the edge of a network, close to where the data is generated. This reduces latency and cuts the amount of data that must travel back and forth between the devices generating it and a centralized cloud server. As a result, edge computing enables real-time decision-making and improves the performance of latency-sensitive applications such as autonomous vehicles, industrial robotics, and remote monitoring systems.
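To make the data-reduction idea concrete, here is a minimal Python sketch of an edge node that checks each sensor reading locally and sends only a compact summary upstream. The `Summary` type, the `process_at_edge` function, the threshold, and the sample readings are all hypothetical names and values chosen for illustration. They do not come from any particular edge platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Compact record sent upstream in place of the raw readings (hypothetical)."""
    count: int
    mean: float
    maximum: float
    alerts: int


def process_at_edge(readings: list[float], alert_threshold: float) -> Summary:
    """Check each reading locally, then condense the batch into one summary."""
    if not readings:
        raise ValueError("readings must not be empty")
    alerts = 0
    for value in readings:
        if value > alert_threshold:
            # A real system would act here, without a round trip to the cloud.
            alerts += 1
    return Summary(len(readings), mean(readings), max(readings), alerts)


# Illustrative values only: four raw readings become one summary record.
readings = [20.5, 21.0, 35.5, 21.5]
summary = process_at_edge(readings, alert_threshold=30.0)
print(summary)
```

In this sketch the threshold check happens on the edge node itself, so the time-critical decision never waits on the network, and only one `Summary` per batch crosses it. How much to aggregate before uploading is a design choice that depends on what the central service needs to see.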

Edge computing comes in several forms, distinguished by how close the processing sits to the data source. Near-edge computing processes data at the network's edge, near the data source. Far-edge computing processes data at the network's outer edge, often at remote locations that are still closer to the data source than centralized cloud servers are. Devices themselves can also have edge computing capabilities built in, which lets them process and analyze data locally before transmitting it to the cloud. Cloud providers now offer edge computing services as well, distributing their infrastructure closer to the network's edge for faster processing and lower latency. Finally, regionalized data centers and on-premises edge infrastructure are available to organizations that want to keep their data and processing local.

Players across the tech industry are implementing edge computing in different ways. Hyperscalers such as Amazon Web Services, Microsoft Azure, and Google Cloud Platform deploy edge infrastructure in many locations worldwide, which keeps their services accessible and performant for a broader range of customers; they also offer on-premises options. Other cloud service providers (CSPs) are likewise investing in edge infrastructure for customers who want to process data closer to the source or push application support features closer to devices, which reduces bandwidth requirements on the core network. Additionally, many organizations are building regionalized data centers and on-premises edge infrastructure to gain more control over their data and reduce reliance on the public cloud. This range of approaches lets businesses and individuals choose the edge computing solution that best fits their requirements and priorities.